Essayer OR - Gratuit

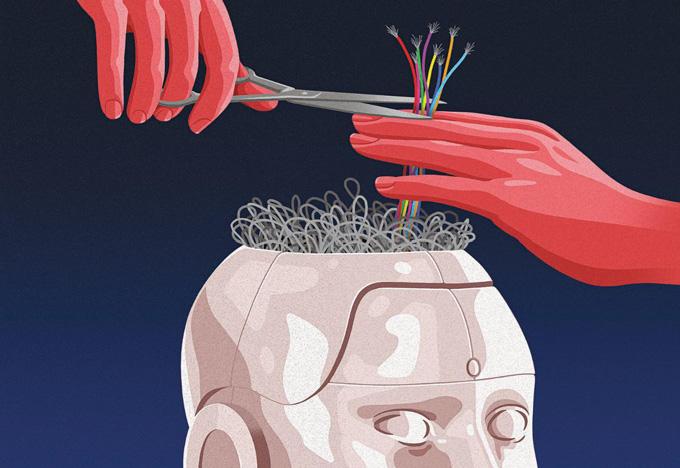

Scientists find a new way to spot AI 'hallucinations'

TIME Magazine

|July 15, 2024

TODAY’S GENERATIVE ARTIFICIAL INTELLIGENCE (AI) tools often confidently assert false information.

Computer scientists call this behavior “hallucination,” and it has led to some embarrassing public slip-ups. In February, Air Canada was forced by a tribunal to honor a discount that its customer- support chatbot had mistakenly offered to a passenger. In May, Google made changes to its new “AI overviews” search feature, after it told some users that it was safe to eat rocks. And in June 2023, two lawyers were fined $5,000 after one of them used ChatGPT to help him write a court filing. The chatbot had added fake citations to the submission, which pointed to cases that never existed.

But at least some types of AI hallucinations could soon be a thing of the past. New research, published June 19 in the peer-reviewed journal Nature, describes a new method for detecting when an AI tool is likely to be hallucinating. The method is able to discern between correct and incorrect AI- generated answers approximately 79% of the time, which is about 10 percentage points higher than other leading strategies. The results could pave the way for more- reliable AI systems in the future.

Cette histoire est tirée de l'édition July 15, 2024 de TIME Magazine.

Abonnez-vous à Magzter GOLD pour accéder à des milliers d'histoires premium sélectionnées et à plus de 9 000 magazines et journaux.

Déjà abonné ? Se connecter

Listen

Translate

Change font size